Introduction

If you've built a design system, you've done real work. You've taken the chaos of ad-hoc styling decisions and created coherence: patterns that hold, components that compose, and a visual language that means something.

That work doesn't stop being valuable, but it was built for a specific audience: human developers who learn through code review, pairing, and accumulated exposure. They absorb implicit rules over months. They develop taste for "how we do things here."

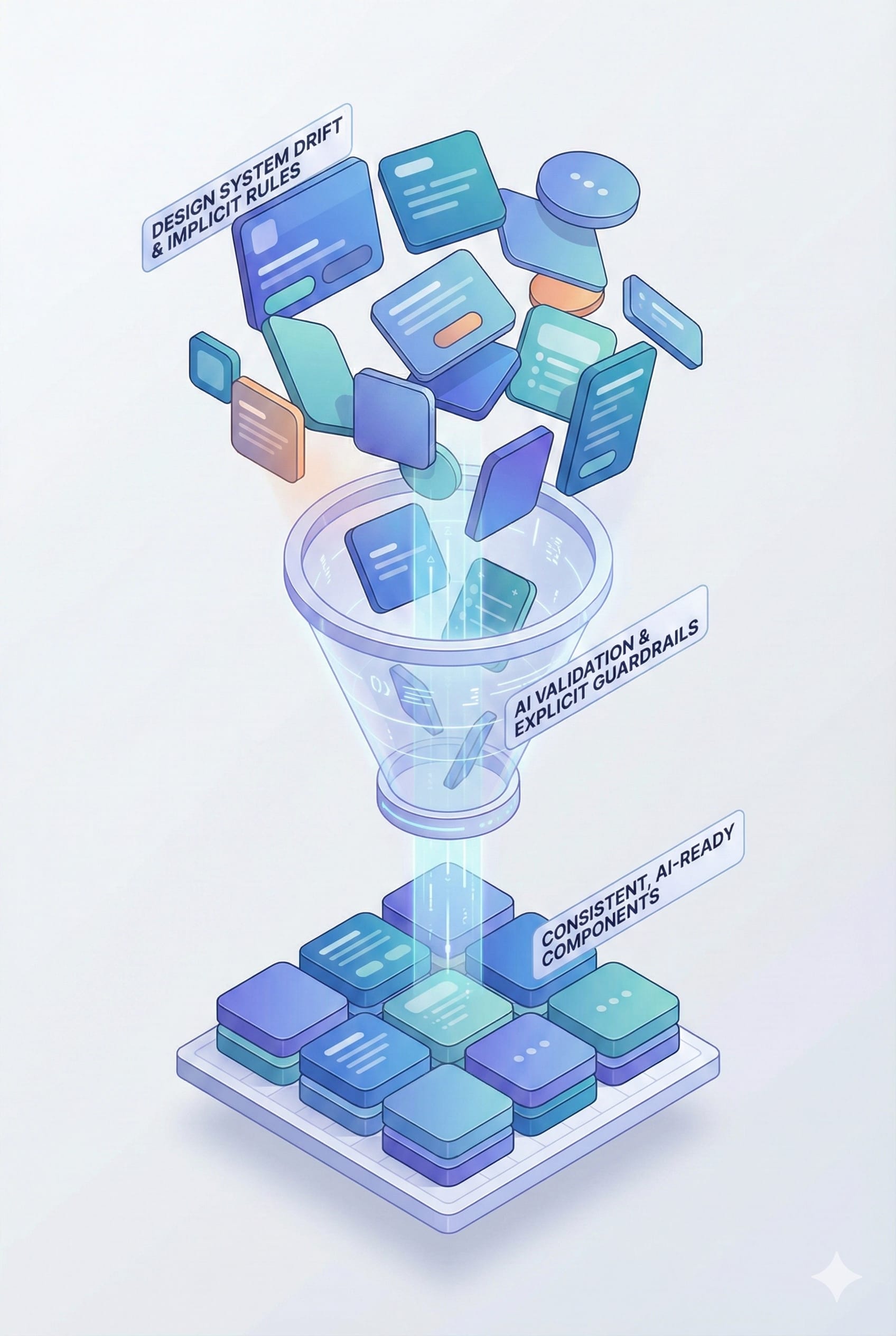

AI doesn't get that ramp-up time. It parses what's documented and fills gaps with patterns from training data. The result is often code that's technically correct but visually off (not broken, just not yours).

This is a translation problem, not a design system problem. Your system has knowledge AI can't access yet. These are the questions teams ask us most often about bridging that gap and what we've found actually works.

A note on context: This assumes you have a component library with enough maturity that there are patterns worth preserving. If you're earlier than that, some of this advice inverts. You might build AI-native from the start rather than retrofitting. We touch on that toward the end.

Why isn't AI following our design system?

AI tools only know what's explicitly documented in places they can see. Your design system probably has implicit rules (things experienced developers "just know") that have never been written down, or live in Figma files and wikis that AI never parses during code generation.

The general principle: if a rule isn't in or near the code, AI is guessing. Sometimes it guesses well. Often it doesn't.

There are edge cases, like AI with web browsing, very large context windows, or retrieval-augmented setups. But for most teams using Copilot, Cursor, or Claude in standard configurations, code-adjacent documentation is what AI actually sees.

Where should design decisions be documented?

In the code itself. Comments directly in component files, near the decisions they describe.

Not PDFs. Not Confluence. Not Figma annotations. These are fine for human reference, but AI parses code; it doesn't browse your documentation site during generation.

Example of what works:

```ts
// Premium cards use diagonal cut corners (16px, top-right)
// Design system rule: Visual differentiator for paid features
// Do NOT use for free-tier components
```

This takes a rule that lives in a designer's head and puts it where AI will actually encounter it.
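To see what "near the decision" can look like in a whole file, here is a minimal TypeScript sketch. The `Tier` type, the `cardStyle` helper, and the use of a CSS `clip-path` polygon to produce the cut corner are assumptions for illustration, not part of any real component library. The point is only that the rule comment sits directly above the constant and branch it governs, so AI reads the rule and the implementation together.

```ts
// Hypothetical product tiers.
type Tier = "free" | "premium";

// Premium cards use diagonal cut corners (16px, top-right)
// Design system rule: Visual differentiator for paid features
// Do NOT use for free-tier components
const PREMIUM_CORNER_CUT_PX = 16;

interface CardStyle {
  clipPath: string;
}

// Hypothetical helper: returns the card's clip-path for a given tier.
function cardStyle(tier: Tier): CardStyle {
  if (tier === "premium") {
    const cut = PREMIUM_CORNER_CUT_PX;
    // Polygon that trims a 16px triangle off the top-right corner.
    return {
      clipPath: `polygon(0 0, calc(100% - ${cut}px) 0, 100% ${cut}px, 100% 100%, 0 100%)`,
    };
  }
  // Free-tier cards keep square corners: the cut is reserved for paid features.
  return { clipPath: "none" };
}
```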

What we've seen does not work: Responding to AI inconsistency by writing more documentation in the same places. Longer READMEs, more detailed Confluence pages, better Figma annotations. The problem isn't the volume of information; it's where that information lives. Documentation AI doesn't see can't help AI, no matter how thorough.

How should we name our design tokens?

Semantically, not visually.

color-blue-500 tells AI nothing about when to use it. color-premium-tier-indicator tells AI exactly when and when not to.

| Instead of | Use |
|---|---|
| color-blue-500 | color-premium-tier-indicator |
| spacing-16 | spacing-form-field-gap |
| font-size-large | font-size-section-heading |

Your token names should answer "when do I use this?" not "what does this look like?"
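One common way to get semantic names without losing the underlying palette is two layers: primitive tokens that describe values, and semantic tokens that alias them and carry the "when do I use this?" answer. A minimal TypeScript sketch, assuming tokens live in plain objects; the hex, pixel, and rem values and the `usage` notes are illustrative, not taken from any real system.

```ts
// Primitive layer: describes what a value looks like. Not for direct use in components.
const primitives = {
  "color-blue-500": "#3b82f6",
  "spacing-16": "16px",
  "font-size-large": "1.25rem",
} as const;

type PrimitiveName = keyof typeof primitives;

interface SemanticToken {
  ref: PrimitiveName; // which primitive this token resolves to
  usage: string; // the "when do I use this?" answer, kept next to the token
}

// Semantic layer: describes when to use a value. Components reference these names.
const semanticTokens: Record<string, SemanticToken> = {
  "color-premium-tier-indicator": {
    ref: "color-blue-500",
    usage: "Marks paid features. Do not use on free-tier components.",
  },
  "spacing-form-field-gap": {
    ref: "spacing-16",
    usage: "Vertical gap between stacked form fields.",
  },
  "font-size-section-heading": {
    ref: "font-size-large",
    usage: "Headings that introduce a section within a page.",
  },
};

// Resolve a semantic token name to its concrete value; unknown names fail loudly.
function resolveToken(tokenName: string): string {
  const token = semanticTokens[tokenName];
  if (!token) throw new Error(`Unknown semantic token: ${tokenName}`);
  return primitives[token.ref];
}
```

Because `resolveToken` only accepts semantic names, code that reaches for `color-blue-500` directly fails instead of silently picking a visual value.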

Honest caveat: We don't have controlled studies proving how much this improves AI output. That research barely exists yet. This is pattern-matching from what we've observed across projects, not science. Our working theory is that semantic naming gives AI contextual signals it can't infer from visual property names. But we're reasoning from experience, not data. Your mileage may vary, and we'd be curious to hear what you find.

What's an MCP server and do we need one?

Model Context Protocol (MCP) servers let AI tools query your design system programmatically, asking "what component should I use for X?" and getting a structured answer, rather than inferring from code patterns.

This is an emerging pattern and we're still learning what works. The honest answer is that most teams don't need this yet. For smaller systems, well-structured comments and semantic naming get you most of the way. The complexity of maintaining an MCP server may not pay off.

For larger systems (hundreds of components, complex conditional rules, multiple product surfaces) a queryable interface starts making sense. The principle is that AI should be able to ask your design system questions, not just read it.
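The "ask, don't just read" idea can be prototyped before any protocol is involved: a structured registry of components and their usage rules, plus a lookup that answers "what component should I use for X?" with a structured result. An MCP server could expose a function like this as a tool; the sketch below deliberately stops short of the MCP SDK, and the registry entries, field names, and naive keyword matching are all hypothetical simplifications.

```ts
interface ComponentRule {
  component: string;
  useFor: string[]; // intents this component is meant for
  note: string; // the usage rule in plain language
}

// Hypothetical registry; in practice it might be generated from component source comments.
const registry: ComponentRule[] = [
  {
    component: "DataTable",
    useFor: ["tabular data", "sortable list"],
    note: "Use for sortable, paginated records. For a few headline numbers, use StatCard.",
  },
  {
    component: "StatCard",
    useFor: ["key metric", "kpi"],
    note: "One headline number per card.",
  },
];

interface Answer {
  component: string;
  note: string;
}

// Structured answer instead of prose. Substring matching stands in for real search.
function recommendComponent(intent: string): Answer | null {
  const query = intent.toLowerCase();
  const match = registry.find((rule) =>
    rule.useFor.some((useCase) => query.includes(useCase)),
  );
  return match ? { component: match.component, note: match.note } : null;
}
```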

If you want to explore this:

- Anthropic's MCP documentation is the starting point: https://modelcontextprotocol.io

- The basic architecture: a server that exposes your design system rules through a standardized protocol that AI tools can call

- Lighter-weight alternatives: Cursor rules files, Claude project instructions, or structured markdown that gets included in context
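For the lighter-weight route, the same kind of rule data can be rendered as structured markdown and committed wherever your tool reads instructions (a Cursor rules file, Claude project instructions, or a context file). A sketch, assuming a hypothetical `UsageRule` shape; the DO/DON'T output format is illustrative, not a format any of those tools requires.

```ts
interface UsageRule {
  component: string;
  use: string[]; // when and how to use the component
  avoid: string[]; // where it must not be used
}

// Render rules as plain markdown so any tool that accepts text instructions can consume them.
function renderRulesMarkdown(rules: UsageRule[]): string {
  const lines: string[] = ["# Design system rules", ""];
  for (const rule of rules) {
    lines.push(`## ${rule.component}`);
    for (const item of rule.use) lines.push(`- DO: ${item}`);
    for (const item of rule.avoid) lines.push(`- DON'T: ${item}`);
    lines.push("");
  }
  return lines.join("\n");
}

const rulesMarkdown = renderRulesMarkdown([
  {
    component: "PremiumCard",
    use: ["Diagonal cut corner (16px, top-right) to mark paid features"],
    avoid: ["Free-tier components"],
  },
]);
```

Generating the file from data, rather than hand-editing it, keeps it from drifting away from whatever else reads the same rules.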

We're experimenting with this ourselves and don't have strong conclusions yet about where the complexity threshold is. If you try it, we'd genuinely like to hear what you learn.

How do we handle complex patterns like dashboards?

Dashboards, data tables, multi-step forms: these are where AI goes generic. Its training data looks like every SaaS app you've ever seen, and that's what it produces by default.

What seems to help: pattern-specific context documents.

The process we've used:

1. Gather 3-5 production examples of the pattern done well

2. Ask AI to analyze them: "What's consistent across these? What seems intentional?"

3. Review and correct what AI articulates; it will miss things, sometimes obvious ones

4. Save that as a reference document AI can access during generation
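The saved reference is easier to keep consistent if every pattern document has the same shape. A sketch of one possible structure, assuming the team stores each pattern as data and renders it to text for the AI's context; the field names, file paths, and dashboard conventions are hypothetical. Keeping reviewer corrections from step 3 in their own section makes it visible where AI's analysis was wrong.

```ts
interface PatternReference {
  pattern: string;
  examples: string[]; // step 1: production examples done well (paths are hypothetical)
  observed: string[]; // step 2: consistencies AI identified
  corrections: string[]; // step 3: what reviewers fixed or added
}

function renderPatternReference(ref: PatternReference): string {
  // One markdown section per list, with each item as a bullet.
  const section = (title: string, items: string[]) =>
    [`## ${title}`, ...items.map((item) => `- ${item}`)].join("\n");
  return [
    `# Pattern: ${ref.pattern}`,
    section("Reference examples", ref.examples),
    section("Conventions", ref.observed),
    section("Reviewer corrections (take precedence)", ref.corrections),
  ].join("\n\n");
}

const dashboardReference = renderPatternReference({
  pattern: "Dashboard",
  examples: ["src/pages/billing/Overview.tsx", "src/pages/usage/Summary.tsx"],
  observed: ["Filters sit above the content, never in a sidebar"],
  corrections: ["Empty states use the EmptyPanel component, not plain text"],
});
```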

The idea is to give AI your patterns rather than letting it default to generic ones.

Honest caveat: This is more art than science. We've seen it help, but we've also seen teams create elaborate pattern documentation that AI still ignores in favor of its training. The results seem to depend on how the documentation is structured, how it's surfaced to AI, and factors we don't fully understand yet. Consider this a promising direction rather than a proven solution.

Should we automate design system validation?

Yes, and not only to catch violations but also to teach.

When a test fails with specific feedback explaining what violated the rule and why, that feedback becomes a signal. AI adjusts on the next attempt. Silent failures (or failures that just say "failed") teach nothing.

What effective validation looks like:

- Catches violations before merge

- Names the specific rule that was broken

- Suggests the correct approach

- Creates a feedback loop that compounds over time
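A minimal sketch of a check with those properties, assuming a hypothetical rule (`ds/no-raw-color`) that components must use semantic color tokens instead of raw hex values. In a real setup this would more likely be a custom lint rule run in CI; this standalone version only shows the shape of a useful failure: which rule, where, why, and what to do instead.

```ts
interface Violation {
  ruleId: string;
  line: number;
  message: string;
}

// Matches 3- or 6-digit hex colors such as #fff or #3b82f6.
const HEX_COLOR = /#(?:[0-9a-fA-F]{3}){1,2}\b/g;

// Scan source text line by line and report each raw hex color with an actionable message.
function checkRawColors(source: string): Violation[] {
  const violations: Violation[] = [];
  source.split("\n").forEach((text, index) => {
    const found = text.match(HEX_COLOR) ?? [];
    for (const hex of found) {
      violations.push({
        ruleId: "ds/no-raw-color",
        line: index + 1,
        message:
          `Raw color ${hex} bypasses the design system. ` +
          `Rule ds/no-raw-color: colors must come from semantic tokens so their purpose stays explicit. ` +
          `Fix: use the semantic token for this purpose (for example, color-premium-tier-indicator).`,
      });
    }
  });
  return violations;
}
```

A message that names the rule and the fix gives AI something concrete to correct on the next attempt, where a bare "failed" does not.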

What doesn't work: Validation so strict that AI output fails constantly. That creates noise, not signal. The goal is meaningful feedback on meaningful violations: rules tight enough to catch real drift, but loose enough that AI can actually succeed.

How do we know if it's working?

One metric matters: what happens after AI generates code?

- Significant rework (often easier to start over): Your design system isn't speaking AI's language yet

- Moderate fixes (component swaps, structural changes): Gaps remain but foundation is there

- Minor tweaks (spacing, edge cases): You're ahead of most teams

- Mostly production-ready: Genuinely AI-native

This is subjective and hard to measure precisely, but you'll know it when you feel it. The shift from "AI is creating work" to "AI is saving work" is real, even if the percentage is fuzzy.

Everything else (documentation coverage, token naming, validation rules) is a means of moving down this ladder.
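If you want a rough number to watch alongside the gut feel, one hypothetical proxy is how much of an AI-generated change survives review unedited. The sketch below buckets that ratio onto the four rungs above. The thresholds are arbitrary placeholders, not calibrated values, and a line count can't see things like whether the right component was chosen in the first place.

```ts
type Rung =
  | "significant rework"
  | "moderate fixes"
  | "minor tweaks"
  | "mostly production-ready";

// keptLines: AI-generated lines that reached merge without human edits.
// The cut-offs below are placeholders for illustration only.
function classifyRework(generatedLines: number, keptLines: number): Rung {
  if (generatedLines <= 0) throw new Error("generatedLines must be positive");
  const keptRatio = keptLines / generatedLines;
  if (keptRatio < 0.5) return "significant rework";
  if (keptRatio < 0.8) return "moderate fixes";
  if (keptRatio < 0.95) return "minor tweaks";
  return "mostly production-ready";
}
```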

How long does this take?

Honest answer: we don't have enough data to give confident timelines.

The range we've seen for teams with mature design systems: meaningful improvement in 3-6 months. But "meaningful" is doing a lot of work there. Some saw faster results on high-frequency components while long-tail patterns took much longer.

Teams without a coherent design system are solving two problems at once. Sometimes that's the right approach: building to be AI-ready from the start. But timelines vary more and depend heavily on scope.

The mistake we see most often: Trying to fix everything at once. Start with your highest-frequency components. Get those documented, semantically named, and validated. Learn what works in your specific context. Expand from there.
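Counting imports from the design system package is a quick first pass at finding your highest-frequency components. A sketch, assuming source files are already loaded into a map of path to contents and that components are imported from a package called `@acme/design-system` (a hypothetical name). Real code would read files from disk and would be better served by an AST parser than a regular expression.

```ts
// Matches named imports such as: import { Button, Card } from "@acme/design-system";
const DS_IMPORT = /import\s*\{([^}]*)\}\s*from\s*["']@acme\/design-system["']/g;

function countComponentUsage(files: Map<string, string>): Array<[string, number]> {
  const counts = new Map<string, number>();
  files.forEach((source) => {
    DS_IMPORT.lastIndex = 0;
    let match: RegExpExecArray | null;
    while ((match = DS_IMPORT.exec(source)) !== null) {
      for (const raw of (match[1] ?? "").split(",")) {
        // Keep the original name when an import is aliased ("Button as Btn").
        const componentName = raw.trim().split(/\s+as\s+/)[0];
        if (componentName) counts.set(componentName, (counts.get(componentName) ?? 0) + 1);
      }
    }
  });
  // Most-imported first: candidates to document, rename, and validate first.
  return Array.from(counts.entries()).sort((a, b) => b[1] - a[1]);
}
```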

What if AI keeps making the same mistakes?

That's a signal. Repeated mistakes mean there's a rule AI can't see.

When you catch a pattern:

- Identify the implicit rule being violated

- Document it in the code where AI will find it

- Add validation that catches future violations with specific feedback
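Spotting that a mistake repeats is easier if review findings are logged with a short rule label. A minimal sketch, assuming reviewers (or validation) record each finding in a hypothetical `Finding` shape; any rule that crosses a threshold (an arbitrary default of 3 here) becomes a candidate for the document-then-validate steps above.

```ts
interface Finding {
  rule: string; // short label for the implicit rule that was violated
  file: string;
}

// Return rules violated at least `threshold` times, most frequent first.
function repeatedMistakes(findings: Finding[], threshold = 3): string[] {
  const tally = new Map<string, number>();
  for (const finding of findings) {
    tally.set(finding.rule, (tally.get(finding.rule) ?? 0) + 1);
  }
  return Array.from(tally.entries())
    .filter(([, count]) => count >= threshold)
    .sort((a, b) => b[1] - a[1])
    .map(([rule]) => rule);
}
```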

Each repeated mistake is an invitation to make something explicit that was previously unrecorded knowledge. Tedious, but valuable. You're building institutional memory that doesn't walk out the door when someone leaves.

We don't have a design system yet. Should we build one first?

You have a design system; it's just implicit. Every product has patterns, even if undocumented and inconsistent.

The question is whether to formalize before adding AI to your workflow. Our take: doing both together often makes more sense than doing them sequentially. AI becomes a forcing function for documentation. The work of teaching AI is the work of systematizing.

The risk of waiting: AI is already in your developers' tools. They're generating code against an invisible design system. Without explicit rules, you're accumulating inconsistency that's harder to unwind later.

If you're pre-design-system, the advice above partially inverts. Instead of retrofitting, you're asking "how do we build AI-native from the start?" Start with the AI-readable documentation and semantic naming as you create components, rather than adding it after.

What's the cost of not doing this?

We want to be honest that this question has an obvious answer coming from a consultancy that sells design system work. Take this with appropriate skepticism.

What we observe: teams that don't address this aren't standing still. They're accumulating drift as AI-generated code deviates from intended patterns. Each small inconsistency is ignorable; the aggregate becomes significant.

A concrete example: One team we talked to had been using AI coding tools heavily for about 18 months without design system guardrails. When they audited, they found their button component had been implemented 23 different ways across the codebase. AI had generated variations each time rather than reusing the canonical version. None were "wrong." All were slightly different. The cleanup took longer than building proper AI documentation would have.
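An audit like that can start crudely. A sketch, assuming source files are available as a map of path to contents and that the canonical component lives at a hypothetical `src/components/Button.tsx`: it lists every other file that renders a raw `<button>` element, which is one place AI-generated variants tend to hide. A regular expression will miss styled wrappers and other indirect variants, so treat the result as a lower bound.

```ts
const CANONICAL_BUTTON = "src/components/Button.tsx"; // hypothetical path
// Lowercase <button followed by whitespace or ">"; the canonical <Button> component does not match.
const RAW_BUTTON = /<button[\s>]/;

function findButtonVariants(files: Map<string, string>): string[] {
  const offenders: string[] = [];
  files.forEach((source, filePath) => {
    if (filePath !== CANONICAL_BUTTON && RAW_BUTTON.test(source)) offenders.push(filePath);
  });
  return offenders.sort();
}
```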

That's one data point, not a universal law. Some teams can probably ignore this for a while without major consequences, especially if AI isn't heavily in their workflow yet or if their product context is more forgiving of visual inconsistency. Others are in contexts where brand precision directly impacts revenue and drift is more urgent.

We can't tell you which situation you're in from the outside.

Where this comes from

We're Rangle, a consultancy that builds design systems and AI-augmented development workflows. We've done this for UNIQLO, Kenvue, Sanofi, and others.

This content isn't neutral. We have obvious incentives. If you read this and think "we need help," we'd like you to call us. We've tried to write what's actually true regardless, but you should weigh what we say knowing we're not disinterested observers.

Take what's useful. Discard what doesn't fit your context.

If your design system isn't accelerating AI-enabled development (or you're not sure where to start), we're happy to spend 30 minutes looking at your situation. No pitch, just an honest read on where you are.